AI in Action: From Hype to Reality – My Journey as a Panelist at the ISMG Mumbai Cybersecurity Conference

Knowingly or unknowingly, we’re all surrounded by AI. From the moment your smartphone alarm wakes you to the Netflix recommendations before bed, artificial intelligence is silently orchestrating our daily experiences. But here’s the question that keeps business leaders awake: Do the benefits outweigh the risks? And more importantly, how do we capitalize on AI’s opportunities while building the governance frameworks to keep it secure, ethical, and trustworthy?

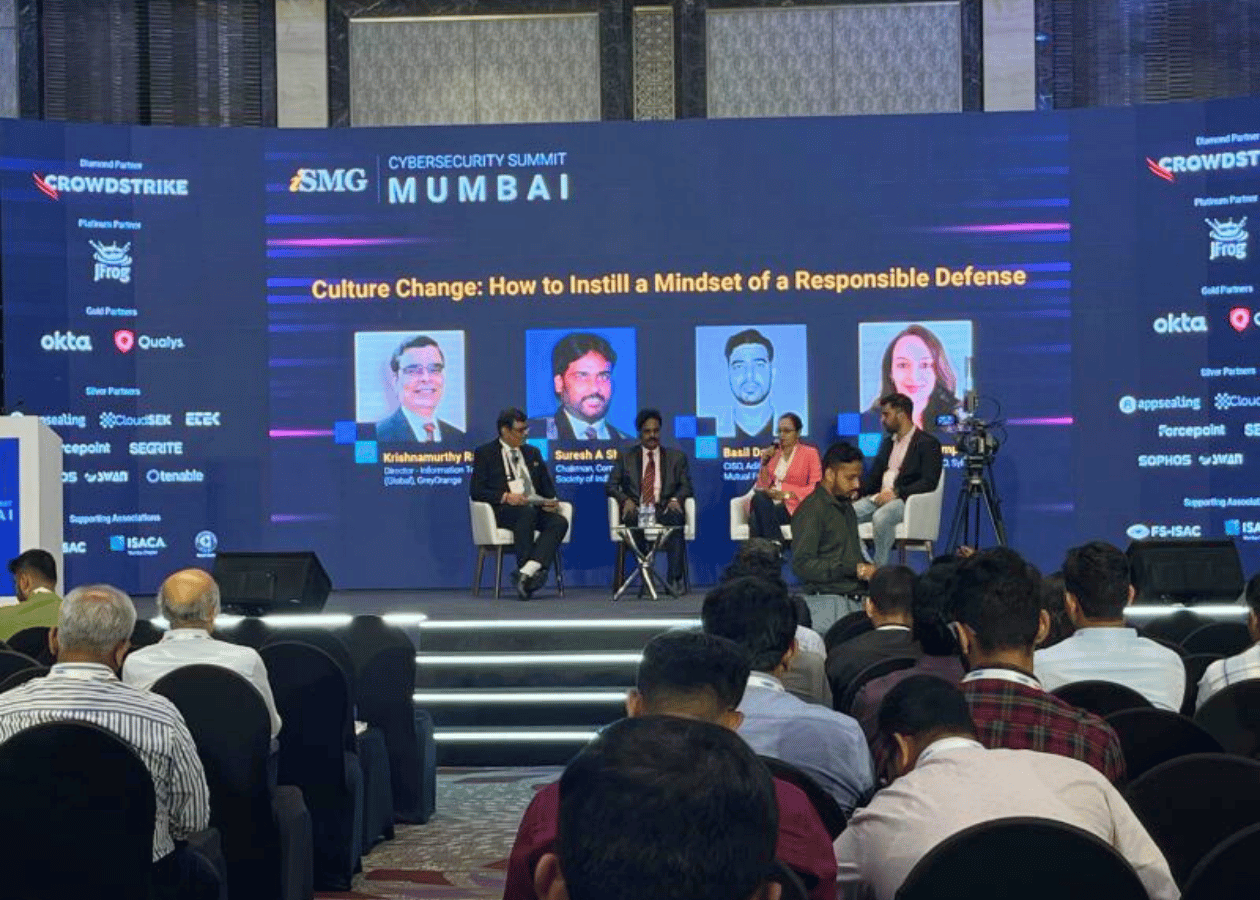

Taking the Stage in Mumbai

I was honored to serve as a panelist at the ISMG Conference in Mumbai, discussing “AI in Action: Identifying and Capitalizing on Business Opportunities through AI” alongside distinguished industry leaders. This wasn’t just another panel about AI’s potential. It was a practical deep dive into how organizations are actually implementing AI, the culture shifts that requires, and the governance challenges we must navigate.

The GenAI Renaissance: Excitement With Responsibility

By 2024, GenAI had put the excitement back into artificial intelligence. While AI has existed for decades, generative AI tools like ChatGPT transformed public perception almost overnight. Suddenly, executives who had never discussed machine learning were asking: “How can we use this?”

Enterprises are betting big on GenAI, but in the rush to innovate, many are overlooking something critical: building an AI culture. Technology alone doesn’t transform organizations—people, processes, and governance do.

During the panel, I emphasized that adopting AI without a cultural foundation is like planting seeds in barren soil. You might see initial sprouts, but sustainable growth requires preparation, nurturing, and the right environment.

AI’s Real-World Impact: Efficiency and Cost Reduction

Let me share how AI is making tangible differences across industries:

In Financial Services: AI-powered fraud detection analyzes transaction patterns in milliseconds, identifying anomalies that would take human analysts hours or days to spot. Risk management models help forecast market movements and flag portfolio vulnerabilities far faster than manual analysis.

In Customer Service: Chatbots handle routine inquiries 24/7, providing instant responses while freeing human agents for complex, empathy-requiring interactions. This isn’t about replacing people—it’s about optimizing how we deploy human talent.

In Manufacturing: Predictive maintenance algorithms analyze equipment sensor data, forecasting failures before they occur. Instead of reactive repairs causing production downtime, organizations perform scheduled maintenance, dramatically reducing costs and delays.

In Healthcare: AI helps improve diagnostic accuracy, personalize treatment plans based on patient data, and optimize hospital resource allocation by predicting admission patterns.

The common thread? AI handles routine, data-intensive tasks, freeing humans for creative, strategic, and emotionally intelligent work that truly drives value.

Building an AI Culture: The Garden Analogy

I told the Mumbai audience that building an AI culture resembles cultivating a garden—it requires careful planning, the right conditions, ongoing care, and community involvement.

Leadership Commitment and Vision

Imagine a family deciding to adopt a healthy lifestyle. If parents aren’t committed and don’t lead by example, children won’t follow. Similarly, AI culture requires leadership that not only endorses AI but actively demonstrates commitment, articulating how AI aligns with organizational goals and long-term strategy.

Education and Training

AI literacy isn’t optional—it’s foundational. Continuous learning opportunities help employees understand AI capabilities, limitations, and applications. This isn’t a one-time workshop; it’s ongoing education that evolves with technology.

Cross-Functional Collaboration

AI initiatives succeed when diverse perspectives converge. Data scientists, business leaders, compliance officers, and domain experts must collaborate seamlessly—like organizing a community event where cooks, decorators, marketers, and volunteers contribute unique expertise toward shared success.

Encouraging Experimentation

Innovation requires psychological safety to experiment and fail. Organizations must foster environments where trying new AI applications is encouraged, even when outcomes are uncertain. Like a child learning to ride a bicycle, each fall is a learning opportunity.

Ethics and Trust as Foundations

Trust is built through consistent, ethical behavior. For AI, this means ensuring transparency, fairness, and accountability. Establishing clear ethical guidelines isn’t just good practice; it’s essential for adoption and public trust.

The Governance Imperative

One of the panel’s most engaging discussions centered on AI governance in the absence of comprehensive regulatory frameworks. While regions like the EU are advancing with the AI Act, many organizations operate in regulatory gray zones.

Why Strong Governance Matters

Ensuring ethical use: AI systems significantly affect people’s lives, from hiring decisions to medical diagnoses. Without ethical guidelines, we risk deploying biased, discriminatory, or harmful systems.

Mitigating risks: AI can fail or behave unpredictably, causing financial, reputational, or physical harm. Governance frameworks identify, assess, and mitigate these risks.

Building trust: Robust governance builds stakeholder confidence. Customers, employees, regulators, and investors need assurance that AI is deployed responsibly.

Creating Your Own Governance Standards

When regulatory frameworks lag innovation, organizations must establish internal standards:

Form an AI Ethics Committee: Bring together diverse stakeholders (technologists, ethicists, legal experts, and business leaders) to oversee initiatives and assess their ethical implications.

Define clear policies: Establish guidelines covering data use, algorithmic transparency, accountability, and bias mitigation.

Implement continuous monitoring: Regular audits ensure AI systems perform as intended and adhere to ethical standards.

Maintain documentation: Document AI models, data sources, decision-making processes, and impact assessments comprehensively.

Engage stakeholders: Involve diverse voices, including employees, customers, and community representatives, in shaping AI policies.

Navigating Data Protection in AI Innovation

Asia’s evolving data protection landscape adds complexity to AI adoption. I shared strategies for balancing innovation with compliance:

Privacy by Design: Incorporate data protection principles from the very start of AI development, not as an afterthought.

Data Anonymization: Apply techniques that protect individual identities while still enabling valuable analysis.

Consent Management: Ensure transparent, informed consent for data collection and usage.

Regular Audits: Routinely verify compliance with regional data protection laws.

Cross-Border Considerations: Navigate international data transfer regulations carefully, especially in APAC’s diverse regulatory environment.
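To make the anonymization point more concrete, here is a minimal Python sketch of one common technique, keyed pseudonymization: a direct identifier is replaced with an HMAC-SHA256 token, so records can still be linked and analyzed without revealing who they belong to. The field names (`customer_id`, `name`, `email`) and the hard-coded key are hypothetical placeholders; in practice the key would live in a managed secret store, separate from the data. Keep in mind that pseudonymized data can still count as personal data under laws such as the GDPR, so treat this as one layer of protection rather than full anonymization.

```python
import hashlib
import hmac

# Hypothetical secret key for illustration only; a real system would load it
# from a key vault and never store it alongside the data.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a deterministic keyed token (HMAC-SHA256, hex)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict) -> dict:
    """Return a copy of the record that is safer to feed into an AI pipeline."""
    cleaned = dict(record)  # copy so the original record is left untouched
    cleaned["customer_id"] = pseudonymize(record["customer_id"])
    cleaned.pop("name", None)   # direct identifiers not needed for analysis are dropped
    cleaned.pop("email", None)
    return cleaned


if __name__ == "__main__":
    raw = {"customer_id": "C-1001", "name": "A. Sharma", "email": "a@example.com", "spend": 420.0}
    print(prepare_record(raw))
```

Because the same identifier and key always yield the same token, analysts can still count or join a customer’s records; rotating or destroying the key breaks that linkage.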

The Challenges of Secure AI Adoption

Securing AI behavior involves addressing multiple interconnected challenges:

Bias and Fairness: AI inherits biases from training data. Ensuring diverse, representative datasets and regular bias audits is crucial for equitable outcomes.

Transparency: Deep learning models often function as “black boxes.” Making AI decisions interpretable builds understanding and trust.

Data Privacy: Protecting sensitive training and operational data prevents misuse and maintains stakeholder confidence.

Security Vulnerabilities: AI systems themselves can be attack targets. Adversarial attacks, data poisoning, and model manipulation require robust defenses.

Regulatory Compliance: Evolving regulations across jurisdictions create complexity requiring proactive compliance strategies.
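Regular bias audits need a measurable yardstick. As one illustrative example, the Python sketch below computes the disparate impact ratio: the lowest group selection rate divided by the highest, flagged when it falls below 0.8 (the “four-fifths” rule of thumb from US employment guidance). The group labels and outcome data are hypothetical, and this is only one of several fairness metrics; the right one depends on the use case.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs. Returns the selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


def audit(outcomes, threshold=0.8):
    """Return (ratio, passes); passes is False when the ratio falls below the threshold."""
    ratio = disparate_impact_ratio(outcomes)
    return ratio, ratio >= threshold


if __name__ == "__main__":
    # Hypothetical model decisions: group A selected 8 of 10, group B selected 4 of 10.
    decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 4 + [("B", False)] * 6
    ratio, passes = audit(decisions)
    print(f"{ratio:.2f} {passes}")  # prints: 0.50 False
```

Running a check like this on every model release, rather than once at launch, is what turns “regular bias audits” from a policy statement into a repeatable control.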

Key Takeaways for Organizations

I concluded my panel remarks with actionable guidance for attendees:

Embrace AI for strategic efficiency: Identify routine tasks where AI adds value, freeing human talent for creative, strategic work.

Cultivate AI as organizational DNA: Make AI part of your culture through continuous learning, experimentation, and ethical commitment.

Prioritize data privacy and security: Implement robust protections and secure AI systems against evolving cyber threats.

Address bias proactively: Regular audits, diverse training data, and fairness metrics ensure inclusive AI applications.

Demand transparency: Make AI decisions explainable to stakeholders, building trust and facilitating adoption.

Innovate within frameworks: Stay informed about regulations while pushing innovation boundaries responsibly.

Establish governance standards: Even without regulatory mandates, develop internal frameworks upholding high ethical standards.

The Mumbai Cybersecurity Energy

What made this conference exceptional was the energy and engagement of Mumbai’s cybersecurity community. The questions were incisive, the discussions were substantive, and the commitment to responsible AI innovation was palpable.

India’s technology sector is at an inflection point, poised to leverage AI for transformative growth while establishing governance models that protect citizens and build global trust. Being part of these conversations reinforced my belief that the future of AI isn’t being determined by Silicon Valley alone; it’s being shaped by diverse voices across global innovation hubs.

AI’s True Potential: Technology Meets Humanity

As I told the Mumbai audience, AI is a powerful tool, but its true potential is unlocked when we combine it with human creativity, ethics, and collaboration. Technology alone doesn’t create value; people using technology thoughtfully, ethically, and strategically do.

The path forward requires balancing innovation with responsibility, efficiency with ethics, and automation with empathy. Organizations that master this balance won’t just survive the AI revolution; they’ll lead it.

Working on AI governance frameworks, responsible AI adoption, or building AI culture in your organization? Let’s connect and explore how we can advance AI innovation with ethics and security at the foundation.

By – Pooja Shimpi | Cybersecurity GRC & AI Governance Advisor